Introduction

Apache Kafka is an open-source distributed streaming platform developed by LinkedIn and donated to the Apache Software Foundation. It is robust, horizontally scalable, and has a flexible architecture.

Kafka ingests data and stores it for a limited retention period, and the same data can be consumed by multiple teams in a company. This can create a vulnerability in terms of data accessibility. Once a Kafka cluster starts onboarding critical and confidential information, implementing security becomes a primary concern.

Kerberos security for Kafka is an optional feature. When security is enabled, features include:

- Authentication of client connections (consumer, producer) to brokers

- ACL-based authorization

Kafka security has three components:

- Encryption of data in flight using SSL/TLS: This encrypts your data between your producers and Kafka, and between your consumers and Kafka. It is the same pattern everyone uses on the web: that’s the “S” in HTTPS (that beautiful green lock you see everywhere on the web).

- Authentication using SSL or SASL: This allows your producers and consumers to authenticate to your Kafka cluster, which verifies their identity. It’s also a secure way for your clients to assert an identity. Why would you want that? Well, for authorization!

- Authorization using ACLs: Once your clients are authenticated, your Kafka brokers can check them against access control lists (ACLs) to determine whether a particular client is authorized to write to or read from a given topic.
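To make the authorization step concrete, here is a toy Java model of an ACL check. It is a hypothetical, simplified sketch, not Kafka's authorizer API: the ToyAcl class, its methods, and the User:alice / User:kafka principals are illustrative names. It assumes allow-only rules, lets listed super users bypass the check, and denies any request that no rule allows.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical, simplified model of ACL-based authorization, for illustration only.
// Real authorizers (Kafka's built-in one, or Sentry in this setup) also handle
// deny rules, wildcards, host restrictions and consumer-group resources.
final class ToyAcl {

    enum Operation { READ, WRITE }

    // One "allow" rule: the principal may perform the operation on the topic.
    private static final class Rule {
        final String principal;
        final Operation operation;
        final String topic;

        Rule(String principal, Operation operation, String topic) {
            this.principal = principal;
            this.operation = operation;
            this.topic = topic;
        }

        boolean matches(String principal, Operation operation, String topic) {
            return this.principal.equals(principal)
                    && this.operation == operation
                    && this.topic.equals(topic);
        }
    }

    private final List<Rule> rules = new ArrayList<>();
    private final Set<String> superUsers;

    ToyAcl(Set<String> superUsers) {
        this.superUsers = superUsers;
    }

    void allow(String principal, Operation operation, String topic) {
        rules.add(new Rule(principal, operation, topic));
    }

    // Super users skip the ACL check; everyone else needs a matching rule,
    // so a request with no matching rule is denied.
    boolean isAuthorized(String principal, Operation operation, String topic) {
        if (superUsers.contains(principal)) {
            return true;
        }
        for (Rule rule : rules) {
            if (rule.matches(principal, operation, topic)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        ToyAcl acl = new ToyAcl(Set.of("User:kafka"));
        acl.allow("User:alice", Operation.WRITE, "test-topic");
        System.out.println(acl.isAuthorized("User:alice", Operation.WRITE, "test-topic")); // true
        System.out.println(acl.isAuthorized("User:alice", Operation.READ, "test-topic"));  // false
        System.out.println(acl.isAuthorized("User:kafka", Operation.READ, "test-topic"));  // true
    }
}
```

Denying by default mirrors the usual broker behaviour: without an explicit allow, an authenticated client still cannot read or write.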

Kerberos is a computer network authentication protocol that works on the basis of tickets, allowing nodes that communicate over a non-secure network to prove their identity to one another in a secure manner.

This article explains how Apache Kafka (parcel version 2.2.1) security can easily be configured with Cloudera Manager on a secured CDH 6.3.0 cluster.

Kafka service configuration on Cloudera Manager

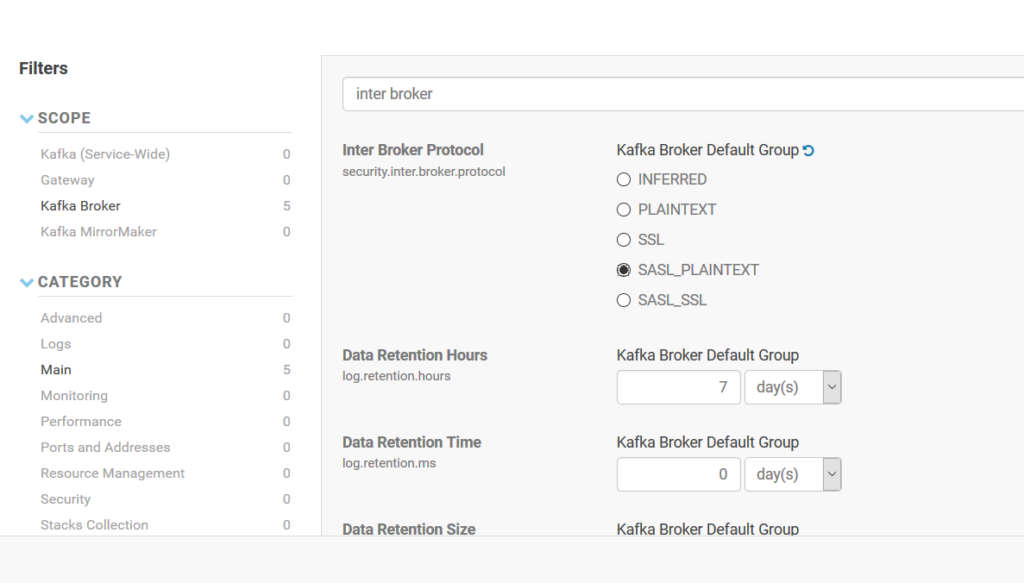

Because the brokers also communicate with each other, the broker-to-broker communication protocol must be defined in Kafka; the security.inter.broker.protocol property is used for this purpose. In this setup, SSL encryption is intended only for client-to-broker communication, while broker-to-broker traffic is left unencrypted (plain text). Since SSL has a performance impact, keeping inter-broker traffic in plain text avoids that overhead.
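The choice comes down to what each protocol value provides. As a reference, the sketch below encodes the four values Kafka accepts for security.inter.broker.protocol (and for the client-side security.protocol). The enum is an illustrative summary, not a Kafka class. Note that SASL_PLAINTEXT, the value used in this setup, authenticates connections via SASL (Kerberos) but does not encrypt them.

```java
// Illustrative summary (not a Kafka class) of the four values Kafka accepts
// for security.protocol and security.inter.broker.protocol.
enum KafkaSecurityProtocol {
    PLAINTEXT(false, false),      // no encryption, no authentication
    SSL(true, false),             // TLS encryption; client-certificate auth is controlled by ssl.client.auth
    SASL_PLAINTEXT(false, true),  // SASL (e.g. Kerberos/GSSAPI) authentication, traffic not encrypted
    SASL_SSL(true, true);         // SASL authentication over a TLS-encrypted connection

    final boolean encrypted;
    final boolean saslAuthenticated;

    KafkaSecurityProtocol(boolean encrypted, boolean saslAuthenticated) {
        this.encrypted = encrypted;
        this.saslAuthenticated = saslAuthenticated;
    }

    public static void main(String[] args) {
        for (KafkaSecurityProtocol p : values()) {
            System.out.printf("%-15s encrypted=%-5s saslAuthenticated=%s%n",
                    p, p.encrypted, p.saslAuthenticated);
        }
    }
}
```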

Kafka Service Configurations

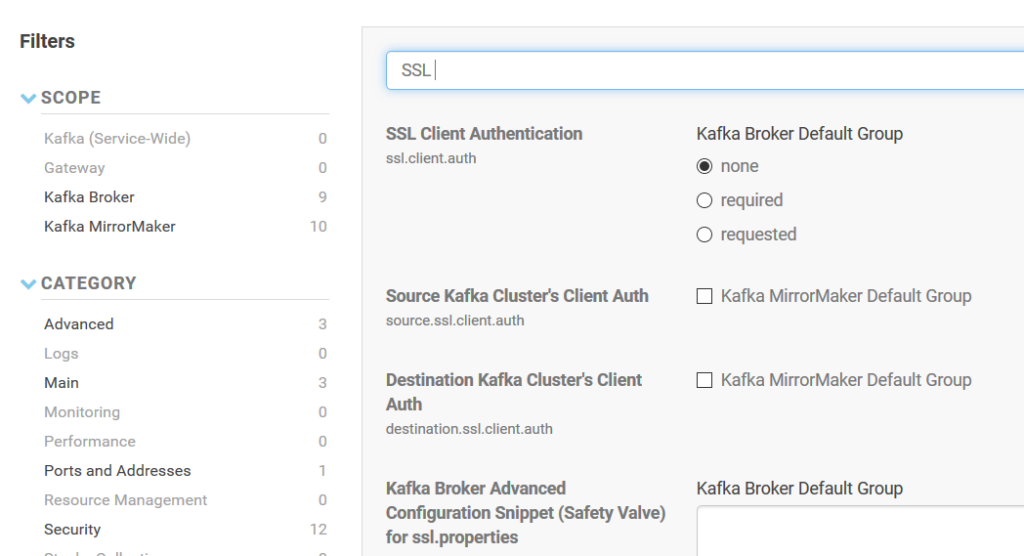

On the Kafka configuration page of Cloudera Manager, the SSL Client Authentication property (ssl.client.auth) should be set to none.

The Inter Broker Protocol property (security.inter.broker.protocol) should be set to SASL_PLAINTEXT.

Note: These changes require a restart of the Kafka service.

To perform operations on a topic (read, write, etc.), the user must be defined on both the Kafka and the Sentry configuration pages of Cloudera Manager. Under the Kafka service, the Kerberos user that obtains tickets with kinit should be defined as a superuser.
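Underneath the Cloudera Manager field, superusers correspond to the broker's super.users property. Its documented format is a semicolon-separated list such as User:kafka;User:alice. The sketch below builds such a value from Kerberos principals. It assumes Kafka's default principal-to-local mapping, in which {username}/{hostname}@{REALM} becomes {username}. The SuperUsers class and the EXAMPLE.COM realm are hypothetical, and you should confirm whether your Cloudera Manager version expects bare names or the User: prefix in its field.

```java
import java.util.List;
import java.util.stream.Collectors;

final class SuperUsers {

    // Approximates Kafka's default principal-to-local mapping, which maps
    // {username}/{hostname}@{REALM} to {username}. Custom
    // sasl.kerberos.principal.to.local.rules would change the result.
    static String shortName(String kerberosPrincipal) {
        int end = kerberosPrincipal.length();
        int slash = kerberosPrincipal.indexOf('/');
        int at = kerberosPrincipal.indexOf('@');
        if (slash >= 0) end = Math.min(end, slash);
        if (at >= 0) end = Math.min(end, at);
        return kerberosPrincipal.substring(0, end);
    }

    // Builds a value in the super.users format: User:a;User:b (duplicates dropped).
    static String superUsersValue(List<String> kerberosPrincipals) {
        return kerberosPrincipals.stream()
                .map(p -> "User:" + shortName(p))
                .distinct()
                .collect(Collectors.joining(";"));
    }

    public static void main(String[] args) {
        // Prints: super.users=User:kafka;User:mykafkaclient
        System.out.println("super.users=" + superUsersValue(
                List.of("kafka/broker-host@EXAMPLE.COM", "mykafkaclient@EXAMPLE.COM")));
    }
}
```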

An additional security configuration must be made for Sentry in Cloudera Manager. Apache Sentry is a granular, role-based authorization module for Hadoop. Sentry provides the ability to control and enforce precise levels of privileges on data for authenticated users and applications on a Hadoop cluster.

Sentry is designed to be a pluggable authorization engine for Hadoop components. It allows you to define authorization rules to validate a user or application’s access requests for Hadoop resources. Sentry is highly modular and can support authorization for a wide variety of data models in Hadoop.

The user name is added to the Admin Group and Allowed Connecting Users sections.

The Java Authentication and Authorization Service (JAAS) login configuration file is used by applications in order to establish Kerberos authentication. This file contains one or more entries that specify authentication technologies. In addition, the login configuration file must be referenced either by setting the java.security.auth.login.config system property or by setting up a default configuration using the Java security properties file.
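The system property does not have to be set on the command line. Below is a minimal sketch, assuming the ticket-cache entry shown next is the one you want. The hypothetical JaasSetup class writes the KafkaClient entry to a file and sets java.security.auth.login.config from inside the JVM. This has the same effect as passing -Djava.security.auth.login.config, provided it runs before anything loads the JAAS configuration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

final class JaasSetup {

    // The same KafkaClient entry as the ticket-cache example in this article.
    static String ticketCacheEntry() {
        return "KafkaClient {\n"
                + "  com.sun.security.auth.module.Krb5LoginModule required\n"
                + "  useTicketCache=true;\n"
                + "};\n";
    }

    // Writes the entry to jaasFile and points the JVM at it. This is the
    // in-process equivalent of -Djava.security.auth.login.config=<path>, so it
    // has to run before any Kafka client (or other JAAS user) loads the config.
    static Path install(Path jaasFile) {
        try {
            Files.write(jaasFile, ticketCacheEntry().getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        System.setProperty("java.security.auth.login.config",
                jaasFile.toAbsolutePath().toString());
        return jaasFile;
    }

    // Convenience for experiments: installs the entry in a temporary file.
    static Path installInTempFile() {
        try {
            return install(Files.createTempFile("kafka-jaas", ".conf"));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path jaas = installInTempFile();
        System.out.println("java.security.auth.login.config=" + jaas.toAbsolutePath());
    }
}
```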

If the application uses cached credentials (the ticket cache populated by kinit), the JAAS file contains the following configuration:

KafkaClient {
  com.sun.security.auth.module.Krb5LoginModule required
  useTicketCache=true;
};

If the keytab approach is used instead, the following configuration can be used:

KafkaClient {
  com.sun.security.auth.module.Krb5LoginModule required
  useKeyTab=true
  storeKey=true
  keyTab="/home/<user>/mykafkaclient.keytab"
  principal="mykafkaclient/<host>@<REALM>";
};

To use the keytab approach, generate the keytab file from the OS command line as shown below:

$ ktutil
> add_entry -password -p <user> -k 1 -e aes256-cts-hmac-sha1-96
> wkt <user>.keytab
> quit
$ kinit -kt <user>.keytab <user>

For console testing, two security parameters should be used for Kerberos authentication:

security.protocol=SASL_PLAINTEXT
sasl.kerberos.service.name=kafka

These parameters can be saved into a file called client.properties.
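The client.properties file is an ordinary Java properties file, so it can be generated or checked with java.util.Properties. In this sketch, the ClientPropertiesFile class is a hypothetical helper, and only the two settings above are written. sasl.mechanism can be left out because Kafka's default SASL mechanism is GSSAPI, which is Kerberos.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.util.Properties;

final class ClientPropertiesFile {

    // The two Kerberos settings the console tools read from client.properties.
    // sasl.mechanism is omitted because Kafka's default mechanism is GSSAPI (Kerberos).
    static Properties kerberosSettings() {
        Properties p = new Properties();
        p.setProperty("security.protocol", "SASL_PLAINTEXT");
        p.setProperty("sasl.kerberos.service.name", "kafka");
        return p;
    }

    // Renders settings in .properties format, i.e. what client.properties would contain.
    static String render(Properties p) {
        StringWriter out = new StringWriter();
        try {
            p.store(out, "Kerberos settings for the Kafka console tools");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toString();
    }

    // Parses .properties text and checks that both Kerberos settings are present.
    static boolean hasKerberosSettings(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return "SASL_PLAINTEXT".equals(p.getProperty("security.protocol"))
                && "kafka".equals(p.getProperty("sasl.kerberos.service.name"));
    }

    public static void main(String[] args) {
        String text = render(kerberosSettings());
        System.out.print(text);
        System.out.println("valid=" + hasKerberosSettings(text));
    }
}
```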

To test the Kafka console producer or console consumer, pass the client properties file as a parameter. Before running the console tools, the user should obtain a Kerberos ticket-granting ticket (TGT) using the kinit command:

$ kinit user

To make the JVM use the jaas.conf file, set its path through the KAFKA_OPTS environment variable:

export KAFKA_OPTS="-Djava.security.auth.login.config=/home/user/jaas.conf"

With these preparations in place, the producer and consumer console tools can be tested.

$ kafka-console-producer --broker-list broker-host:9092 --topic test-topic --producer.config client.properties

$ kafka-console-consumer --bootstrap-server broker-host:9092 \
  --topic sample-topic \
  --from-beginning \
  --consumer.config client.properties \
  --formatter kafka.tools.DefaultMessageFormatter \
  --property print.key=true \
  --property print.value=true \
  --property key.deserializer=org.apache.kafka.common.serialization.StringDeserializer \
  --property value.deserializer=org.apache.kafka.common.serialization.StringDeserializer

The validity and expiration date of the TGT can be checked with the klist command.

While the producer is running, log messages about the TGT can be seen in the console output.

If the Kafka Producer and Consumer APIs are used in the application layer, the same settings must be embedded in the application as properties:

import java.util.Properties;
import org.apache.kafka.clients.CommonClientConfigs;

Properties config = new Properties();
config.setProperty(CommonClientConfigs.SECURITY_PROTOCOL_CONFIG, "SASL_PLAINTEXT");
config.setProperty("sasl.kerberos.service.name", "kafka");
config.setProperty("sasl.mechanism", "GSSAPI");

The security protocol key is taken from the CommonClientConfigs class rather than from ProducerConfig or ConsumerConfig.
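Putting the pieces together, a consumer configuration might look like the following sketch. It uses the literal key names behind the constants (for example, CommonClientConfigs.SECURITY_PROTOCOL_CONFIG is "security.protocol") so it compiles with the JDK alone. The class name, group id, and deserializers are assumptions for illustration. The optional useKeytab method shows an alternative this article does not use: since Kafka 0.10.2, clients accept the JAAS login module line directly through the sasl.jaas.config property instead of a separate jaas.conf file.

```java
import java.util.Properties;

final class KerberosClientConfig {

    // Consumer settings plus the three Kerberos settings, using literal key names
    // (e.g. CommonClientConfigs.SECURITY_PROTOCOL_CONFIG == "security.protocol").
    static Properties consumerProps(String bootstrapServers, String groupId) {
        Properties config = new Properties();
        config.setProperty("bootstrap.servers", bootstrapServers);
        config.setProperty("group.id", groupId);
        config.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        config.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        config.setProperty("security.protocol", "SASL_PLAINTEXT");
        config.setProperty("sasl.kerberos.service.name", "kafka");
        config.setProperty("sasl.mechanism", "GSSAPI");
        return config;
    }

    // Optional alternative to an external jaas.conf: the same keytab login line
    // as the KafkaClient entry, supplied inline via sasl.jaas.config.
    static void useKeytab(Properties config, String keytabPath, String principal) {
        config.setProperty("sasl.jaas.config",
                "com.sun.security.auth.module.Krb5LoginModule required "
                        + "useKeyTab=true storeKey=true "
                        + "keyTab=\"" + keytabPath + "\" "
                        + "principal=\"" + principal + "\";");
    }

    public static void main(String[] args) {
        Properties config = consumerProps("broker-host:9092", "test-group");
        useKeytab(config, "/home/user/mykafkaclient.keytab", "mykafkaclient@EXAMPLE.COM");
        config.list(System.out);
    }
}
```

The resulting Properties object can be passed straight to new KafkaConsumer<String, String>(config).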

The remaining required settings are passed to the application as JVM parameters:

java -Djava.security.auth.login.config=/home/<user>/jaas.conf \
  -Djava.security.krb5.conf=/etc/krb5.conf \
  -Djavax.security.auth.useSubjectCredsOnly=false \
  -cp sample.jar MainApp

A Kafka Streams application is configured in the same way:

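import java.util.Properties;
import org.apache.kafka.streams.StreamsConfig;

Properties config = new Properties();
config.put(StreamsConfig.SECURITY_PROTOCOL_CONFIG, "SASL_PLAINTEXT");
config.put("sasl.kerberos.service.name", "kafka");
config.put("sasl.mechanism", "GSSAPI");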
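For a Streams application, both halves of the setup can also live in code. Below is a minimal sketch, assuming the same file paths as the JVM example above. The KerberosStreamsSetup class, the application id, and the paths are illustrative. applyJvmSettings sets the same three system properties as the -D flags, and streamsProps uses the literal keys behind StreamsConfig's constants.

```java
import java.util.Properties;

final class KerberosStreamsSetup {

    // In-process equivalents of the -D flags; set them before the first Kafka
    // client starts, since the JVM typically reads Kerberos/JAAS settings once.
    static void applyJvmSettings(String jaasConf, String krb5Conf) {
        System.setProperty("java.security.auth.login.config", jaasConf);
        System.setProperty("java.security.krb5.conf", krb5Conf);
        System.setProperty("javax.security.auth.useSubjectCredsOnly", "false");
    }

    // Literal keys for StreamsConfig.APPLICATION_ID_CONFIG, BOOTSTRAP_SERVERS_CONFIG
    // and SECURITY_PROTOCOL_CONFIG, plus the two SASL settings.
    static Properties streamsProps(String applicationId, String bootstrapServers) {
        Properties config = new Properties();
        config.setProperty("application.id", applicationId);
        config.setProperty("bootstrap.servers", bootstrapServers);
        config.setProperty("security.protocol", "SASL_PLAINTEXT");
        config.setProperty("sasl.kerberos.service.name", "kafka");
        config.setProperty("sasl.mechanism", "GSSAPI");
        return config;
    }

    public static void main(String[] args) {
        applyJvmSettings("/home/user/jaas.conf", "/etc/krb5.conf");
        streamsProps("sample-streams-app", "broker-host:9092").list(System.out);
    }
}
```

The properties can then be passed to new KafkaStreams(topology, config). Setting the JVM flags on the command line, as shown earlier, remains the simpler option when you control the launch script.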